Live

France24 English News Live Stream

Global News

Global News Video Playlist

PBS

PBS News Video Playlist

Newsroom Features

MWC Barcelona 2026: Inside “The IQ Era” of Connected Intelligence

Published Saturday February 28, 2026

President Trump’s 2026 State of the Union: Key Moments, Party Reactions, and What It Means for the Midterms

Published Wednesday February 25, 2026

2026 Winter Games & Paralympics: Global Triumphs, Breakthrough Moments and The Road to the French Alps 2030

Published Tuesday February 24, 2026

The Fat Tuesday-Ash Wednesday Connection: From Feast to Reflection

Published Friday February 20, 2026

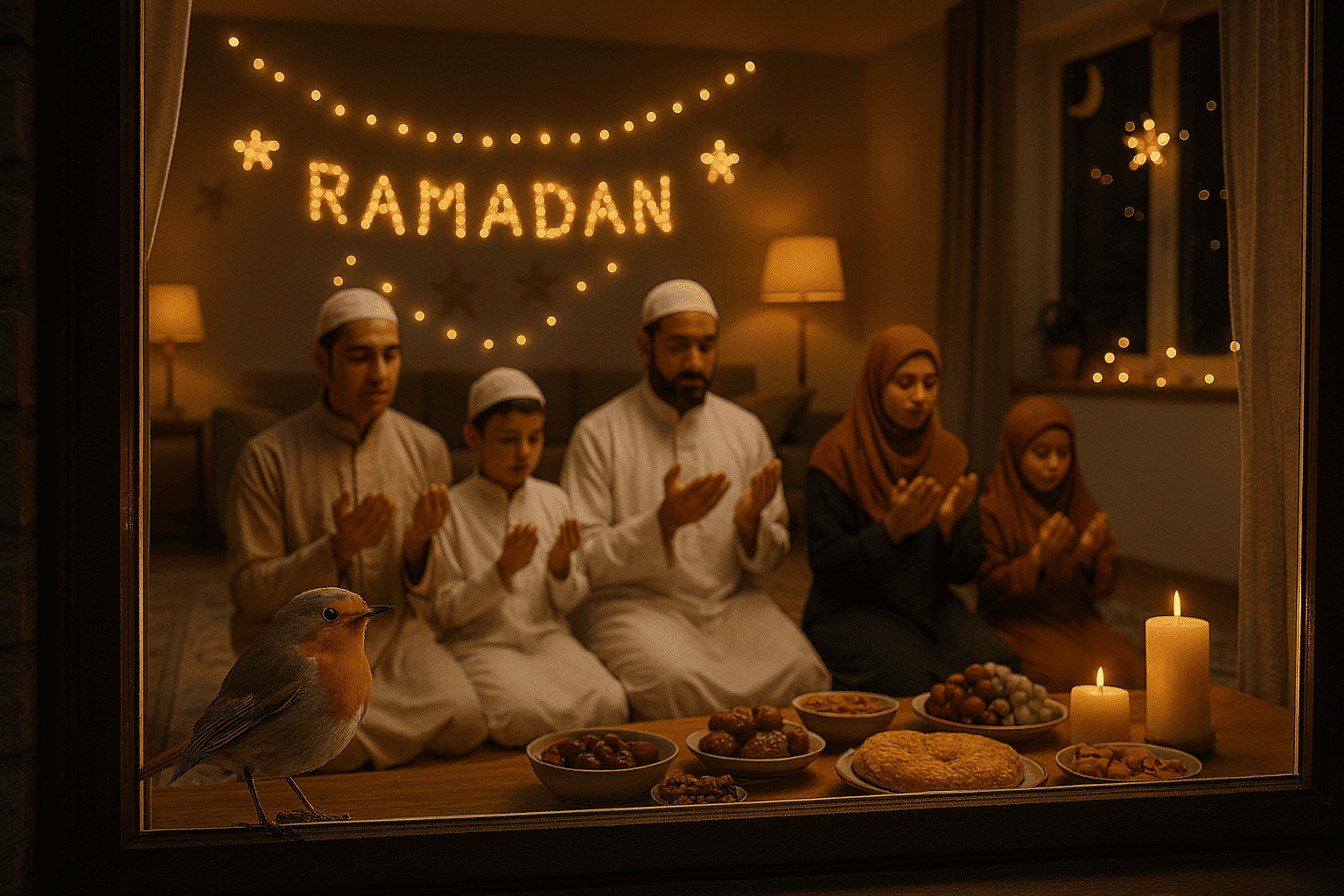

Ramadan 2026: A Month of Fasting, Faith, and Global Community

Published Wednesday February 18, 2026

Marketing Ideas for Your Painting Company

Published Wednesday February 25, 2026

4 Upgrades That Make Your Home Stand Out

Published Tuesday February 24, 2026

Tips for Making Your Home Entryway More Attractive

Published Monday February 23, 2026

How Early Design Choices Affect Fluid Systems

Published Sunday February 22, 2026

Do Modifications Hurt or Help Classic Corvette Value?

Published Saturday February 21, 2026